API Protection using In-memory Data Service

In today’s digital ecosystem, enterprises monetize their valuable digital assets by converting and exposing them as APIs. APIs are the building blocks of a digital ecosystem, but exposing them to the outside world effectively and securely is key to their success. Achieving the business goals behind this (exchanging information, creating an ecosystem, and so on) depends on an API management strategy built around a capable API gateway.

API Protection

Beyond exposing APIs effectively and securely, enterprises must also protect them in order to provide a sustained and reliable service to their partners and consumers. Rate limits, quotas, and throttling protect APIs from unintended or malicious overuse by limiting their consumption.

These protection strategies help the organization avoid resource (API) starvation and thereby improve the availability of its services. They may also act as a defense mechanism against DoS and DDoS, brute-force, and credential-stuffing attacks.
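
As an illustration of limiting consumption, here is a minimal sketch of a token bucket, one common way to implement a rate limit on a single node. It is not taken from any particular gateway product; the class name `TokenBucket` and its parameters (`rate`, `capacity`, `clock`) are assumptions made for this example.

```python
import time


class TokenBucket:
    """Hypothetical per-client limiter: bursts up to `capacity`,
    refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate            # tokens added per second
        self.capacity = capacity    # maximum burst size
        self.clock = clock          # injectable clock, useful for testing
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self, cost=1):
        """Return True if the request may proceed, False if it should be rejected."""
        now = self.clock()
        elapsed = now - self.updated
        # Refill for the time that has passed, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate=5, capacity=10)
    accepted = sum(bucket.allow() for _ in range(20))
    print(f"accepted {accepted} of 20 back-to-back requests")
```

A quota works the same way in spirit, but typically counts requests over a much longer period (per day or per month) instead of refilling continuously.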

In-Memory Data Store

With native integration to an in-memory data store, a gateway can enforce a global rate limit and quota, which matters most when the gateway is deployed as a cluster of multiple nodes. Most API management solutions should offer a native policy that tracks and enforces rate limit and quota consumption across all nodes in the cluster. The in-memory data store is used to store or cache data such as message replay IDs, throughput quota counters, and node counts.
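
To make the idea of a global limit concrete, the following sketch shows several gateway nodes counting requests against one shared counter. Everything here is an assumption for illustration: `SharedCounterStore` is an in-process stand-in for a real distributed store, and `GatewayNode` is a hypothetical name, not the API of any gateway product.

```python
import threading


class SharedCounterStore:
    """In-process stand-in for a distributed in-memory store (assumption).
    A real deployment would use a networked grid with atomic increments."""

    def __init__(self):
        self._counts = {}
        self._lock = threading.Lock()

    def increment(self, key):
        # Atomically add one to the counter and return the new value.
        with self._lock:
            self._counts[key] = self._counts.get(key, 0) + 1
            return self._counts[key]


class GatewayNode:
    """Hypothetical gateway node enforcing a fixed-window limit
    against the shared store rather than a local counter."""

    def __init__(self, name, store, limit, window_seconds):
        self.name = name
        self.store = store
        self.limit = limit
        self.window_seconds = window_seconds

    def allow(self, client_id, now):
        # All nodes derive the same key for the same client and time window.
        window = int(now // self.window_seconds)
        key = f"{client_id}:{window}"
        return self.store.increment(key) <= self.limit


if __name__ == "__main__":
    store = SharedCounterStore()
    node_a = GatewayNode("a", store, limit=3, window_seconds=60)
    node_b = GatewayNode("b", store, limit=3, window_seconds=60)
    for node in (node_a, node_b, node_a, node_b):
        print(node.name, node.allow("client-1", now=0))
```

Because both nodes increment the same key, the limit of three requests per window applies to the cluster as a whole, not to each node separately. This sketch never expires old window keys; a real store would normally attach a time-to-live to each counter.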

In-memory caching keeps frequently accessed data in process memory so that it does not have to be fetched from a database each time, which reduces overall latency, improves performance, and promotes high availability (HA) of that data.
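
The caching behavior described above can be sketched as a small read-through cache with a time-to-live. The names `TTLCache` and `loader` are assumptions for this example; `loader` stands in for whatever slow lookup (such as a database query) the cache is shielding.

```python
import time


class TTLCache:
    """Hypothetical read-through cache: serves values from memory
    until they expire, then reloads them through `loader`."""

    def __init__(self, ttl_seconds, loader, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.loader = loader        # called on a miss, e.g. a database query
        self.clock = clock          # injectable clock, useful for testing
        self._entries = {}          # key -> (value, expires_at)

    def get(self, key):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]         # cache hit: no trip to the backing store
        value = self.loader(key)    # cache miss or expired entry
        self._entries[key] = (value, now + self.ttl)
        return value


if __name__ == "__main__":
    def slow_lookup(key):
        print(f"loading {key} from the backing store")
        return key.upper()

    cache = TTLCache(ttl_seconds=30, loader=slow_lookup)
    cache.get("plan")   # loads from the backing store
    cache.get("plan")   # served from memory
```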

Distributed Key-Value Store

Because the in-memory data store is a distributed key-value store, native integration with it further enables the API gateway to store and look up data for:

- User sessions, including managing Single Log-off

- Continuous Access Evaluation, which listens to authentication events

- Event-triggering policies based on value changes to a map entry
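
The use cases in this list share one pattern: entries in a shared map, plus callbacks that fire when an entry changes. The sketch below shows that pattern in-process. `ObservableMap` and `single_log_off` are hypothetical names for illustration only; a real distributed store would deliver these events to every node in the cluster.

```python
class ObservableMap:
    """In-process stand-in (assumption) for a distributed map
    that notifies listeners when an entry changes."""

    def __init__(self):
        self._data = {}
        self._listeners = []

    def add_listener(self, callback):
        # callback(kind, key, old_value, new_value)
        self._listeners.append(callback)

    def _notify(self, kind, key, old, new):
        for callback in self._listeners:
            callback(kind, key, old, new)

    def put(self, key, value):
        existed = key in self._data
        old = self._data.get(key)
        self._data[key] = value
        self._notify("updated" if existed else "added", key, old, value)

    def remove(self, key):
        if key in self._data:
            old = self._data.pop(key)
            self._notify("removed", key, old, None)

    def get(self, key):
        return self._data.get(key)

    def items(self):
        # Return a copy so callers can remove entries while iterating.
        return list(self._data.items())


def single_log_off(sessions, user):
    """Remove every session that belongs to `user`."""
    for session_id, session in sessions.items():
        if session["user"] == user:
            sessions.remove(session_id)


if __name__ == "__main__":
    sessions = ObservableMap()
    sessions.add_listener(lambda kind, key, old, new: print(kind, key))
    sessions.put("s1", {"user": "alice"})
    sessions.put("s2", {"user": "alice"})
    sessions.put("s3", {"user": "bob"})
    single_log_off(sessions, "alice")
```

A policy that reacts to authentication events could be registered as another listener on the same map, so that a "removed" event revokes access on whichever node receives it.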

Conclusion

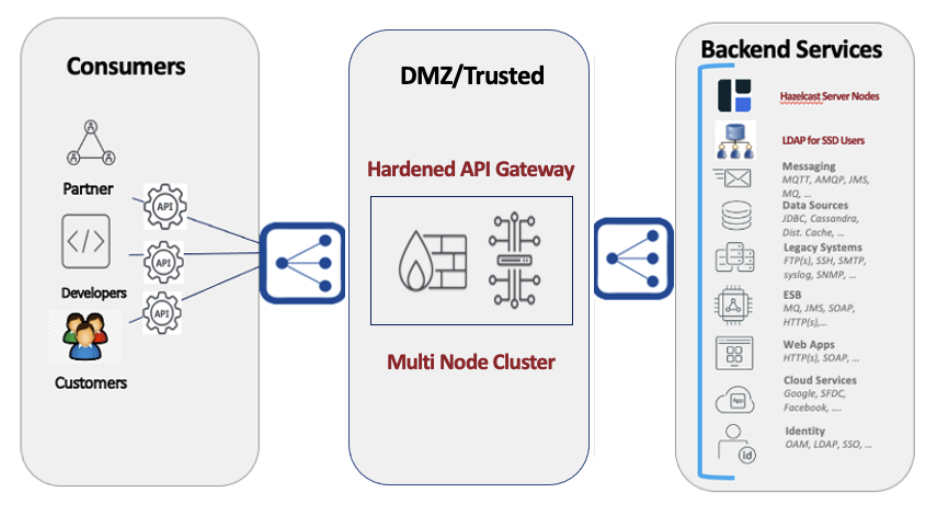

More and more organizations are jumping on the bandwagon of deploying container gateways in the cloud to maintain high availability. Most leading API management solutions support multiple zones within a single region to improve availability. In addition, with the container form factor, gateway cluster nodes can share data through a distributed shared memory grid such as Memcached, Redis, or Hazelcast. In other words, gateway data is replicated across memory on different nodes, and each node can be assigned one or more gateway pods. This architecture enables high availability, increases fault tolerance, reduces latency, and improves performance and scale.

Layer7 API Management has native integration with embedded or external Hazelcast, which enables out-of-the-box (OOTB) global rate limits, quotas, and many of the API protections discussed above (replay, DoS, DDoS, brute force, and so on). With custom policies, it also helps enforce Single Log-off and Continuous Access Evaluation scenarios using natively built store and lookup assertions.

If you don’t have Layer7 API Management, make sure your gateway provider offers similar support through a distributed or in-memory key-value store.